21 Ways to Visualize a Timeseries

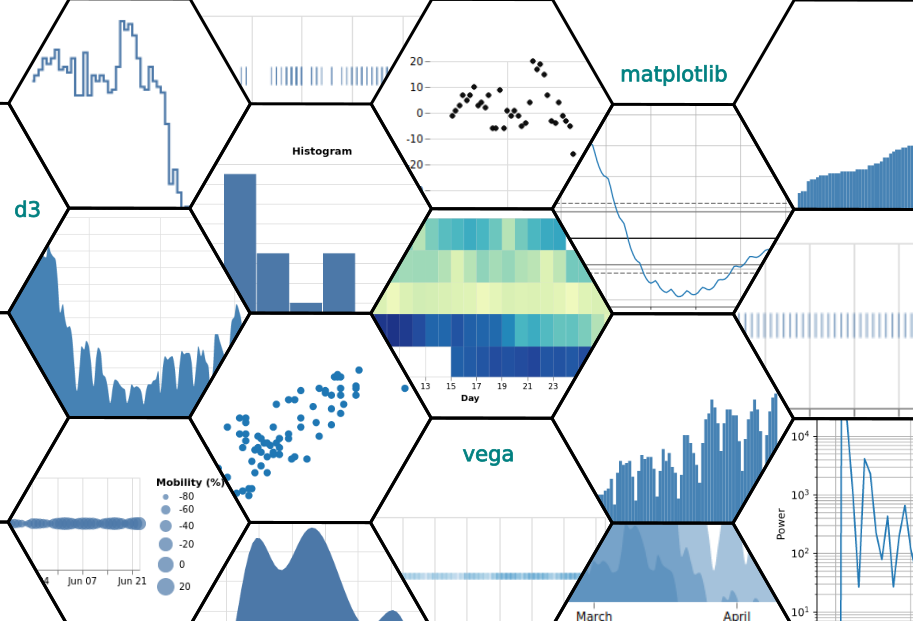

We explore a variety of distinct ways to visualize the same simple dataset. The post is an excursion into the fundamentals of visualization - a partial deconstruction of the process that highlights some common techniques and associated issues.

Course Objective

This course is a deep-dive into the structure of visualizations, in particular visualizations of timeseries data. The course is now live at the Academy.

Pre-requisites

Knowledge of basic visualization techniques and mathematical notation of functions and maps. Familiarity with data series and their usage in data science.

Summary of the Course

What we aim to achieve in this course is to “deconstruct” how typical and less common visualization of timeseries work. In the first instance we decompose the visualization process into:

- A mathematical transformation which (optionally) may operate on the raw data

- A visual transformation which converts quantitative data into a visual space

We apply this “recipe” to a large number of visualizations (21 in total), using always the same simple data series.

21 ways to visualize a simple timeseries

- A Numerical Table is also a Visualization

- Visualizing Observation Times

- Visualizing Observation Values

- Color Plots of Measurement Values

- Bubble Plots of Measurement Values

- Scatter Plots and their Limitations

- Linear Line Plot and Continuity

- The Step Plot and Discreteness

- The Smooth Plot: Pleasant but with a stinging tail

- The Area Chart: Filling up space to our advantage

- New Visualization Horizons with the Horizon Chart

- Abusing the Bar Chart Concept

- A Sorted Bar Chart and the power of mathematical transformations

- The Histogram Transformation

- The (Probability) Density Plot and Mathematical Models

- The Lag Plot and Persistence

- Autocorrelation and further Arcana

- The Phase Diagram and Dynamical Systems

- Displaying data in the Frequency domain

- A Calendar is also a Visualization

- The Plot Thickens: The Weekly Calendar Version

Get Started with the Course

The course is available at the Academy.

Comment

If you want to comment on this post you can do so on Reddit or alternatively at the Open Risk Commons. Please note that you will need a Reddit or Open Risk Commons account respectively to be able to comment!