tailRisk Library 0.2 Release


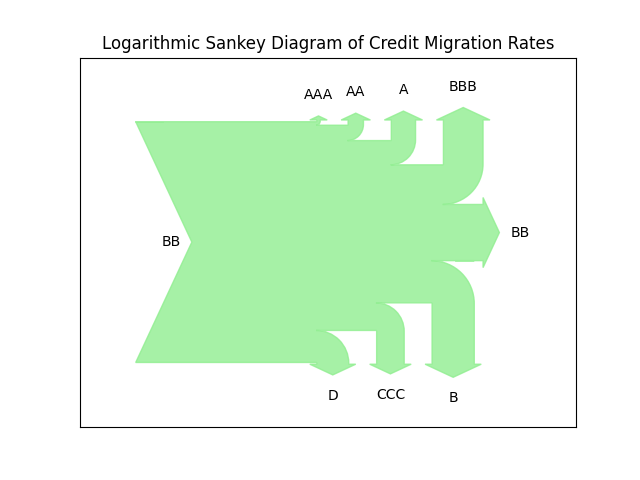

tailRisk is a C++ library for calculating various tail risk measures. Tail risk is a core concept in quantitative risk management, relevant in particular to market risk and credit risk management. The term is used both informally, to denote rarely occurring tail events, and more precisely, to denote concrete classes of risk measures.
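To make "concrete classes of risk measures" more tangible, here is a minimal sketch of two widely used tail risk measures, Value-at-Risk (VaR) and expected shortfall, estimated from a sample of losses. This is not the tailRisk API: the function names `empirical_var` and `empirical_es` are hypothetical, and the quantile and averaging conventions used are only one of several in common use.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper (not part of tailRisk): empirical Value-at-Risk at
// confidence level alpha, taken as the ceil(alpha * n)-th smallest loss.
// Assumption: losses are positive numbers, larger meaning worse.
double empirical_var(std::vector<double> losses, double alpha) {
    if (losses.empty() || alpha <= 0.0 || alpha >= 1.0) {
        throw std::invalid_argument("need non-empty losses and 0 < alpha < 1");
    }
    std::sort(losses.begin(), losses.end());
    const std::size_t n = losses.size();
    // 1-based rank of the quantile observation, capped at n for safety.
    std::size_t k = static_cast<std::size_t>(std::ceil(alpha * static_cast<double>(n)));
    k = std::min(std::max<std::size_t>(k, 1), n);
    return losses[k - 1];
}

// Hypothetical helper (not part of tailRisk): empirical expected shortfall,
// computed here as the mean of all losses at or above the empirical VaR.
double empirical_es(const std::vector<double>& losses, double alpha) {
    const double var = empirical_var(losses, alpha);
    double sum = 0.0;
    std::size_t count = 0;
    for (double x : losses) {
        if (x >= var) {
            sum += x;
            ++count;
        }
    }
    // count >= 1 because the VaR observation itself is in the sample.
    return sum / static_cast<double>(count);
}
```

For the eight losses 1, 2, ..., 8 and `alpha = 0.75`, `empirical_var` returns 6 and `empirical_es` returns 7, the mean of 6, 7 and 8. VaR marks where the tail begins, while expected shortfall summarizes how severe losses are once inside it.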