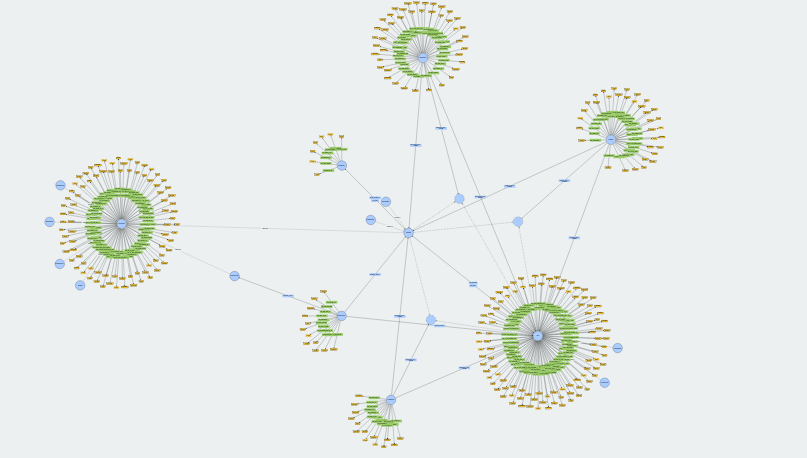

The Open Risk Manual as an Android App

With a new software release, we aim to make the Open Risk Manual more accessible by offering it as an Android app. This post explains what the app is about.

The Open Risk Manual is now also available as an Android App

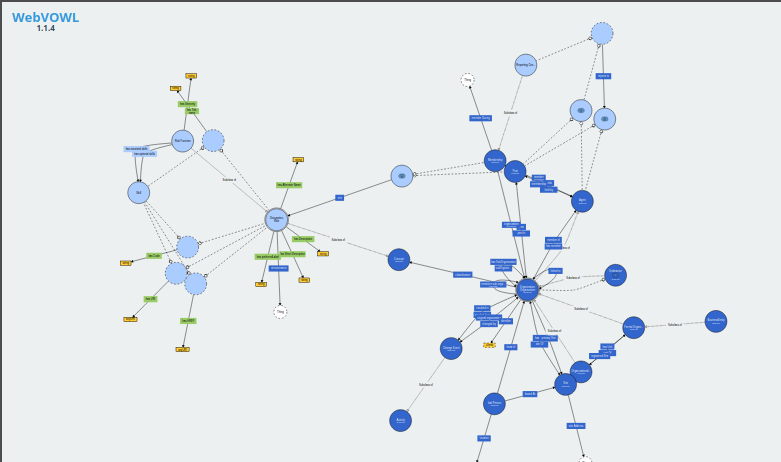

The Open Risk Manual is an open online repository of information (a wiki) about risk management in all its forms. The Manual is developed and maintained by Open Risk. Our objective is to create a comprehensive, detailed, and authoritative collection of risk management resources that is easily accessible to anybody, anywhere. Well, network access is currently required!